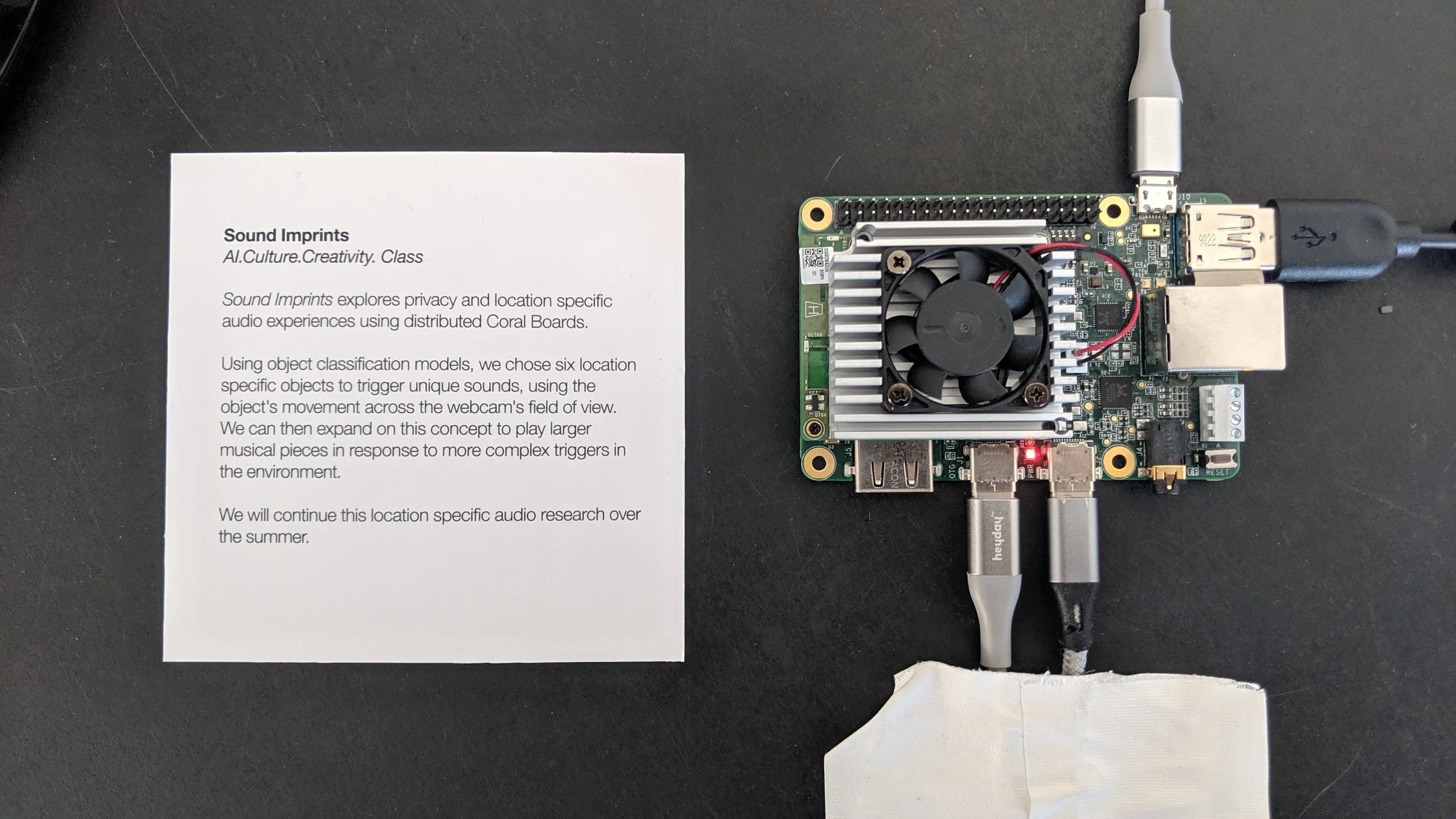

Sound Imprints

AI.Culture.Creativity. Class

Exploration:

In this project, we explore privacy and location-based sound through distributed Coral boards. Our larger goal is to use sound design to prototype the relationships and differences between centralized, distributed, and federated machine learning.

In our first iteration, the Coral boards were distributed across environments, but the data and models were located in a centralized cloud service (Google).

This prototype produces a sound output based on the presence of specific objects in a room, as well as the detection of faces. The objects we picked included an envelope, backpack, cup, laptop, cell phone, and safety pin. We specifically wanted to explore the idea of a “sound imprint” within intelligence systems.

In the demo, the sound triggered when the cup leaves is flowing water (the audio is quiet).

Design Considerations:

We imagined several design iterations based on face presence.

v.1: sound is triggered when a specific object is assigned a classification and a face is detected.

v.2: sound plays continuously while a specific object is assigned a classification and a face is detected.

v.3: sound is triggered once an already classified object disappears, or an already detected face disappears.
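The three trigger rules can be sketched as small functions over two consecutive camera frames. This is a minimal, hypothetical sketch: it assumes each frame has already been reduced to a set of object labels plus a yes/no face flag, and the label strings are simplified versions of the objects listed above (the strings a MobileNet classifier actually emits may differ). The names `Frame`, `v1_triggers`, `v2_playing`, and `v3_triggers` are illustrative and do not come from the project's code.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical target labels, simplified from the objects listed above;
# real classifier label strings may differ.
TARGET_OBJECTS = {"envelope", "backpack", "cup", "laptop", "cell phone", "safety pin"}


@dataclass
class Frame:
    objects: set[str]  # labels assigned to this frame by the classifier
    face: bool         # True if the face detector found at least one face


def _active(frame: Frame) -> set[str]:
    """Target objects that count only while a face is also present."""
    return (frame.objects & TARGET_OBJECTS) if frame.face else set()


def v1_triggers(prev: Frame, cur: Frame) -> set[str]:
    """v.1: fire once when a target object and a face first appear together."""
    return _active(cur) - _active(prev)


def v2_playing(cur: Frame) -> set[str]:
    """v.2: sounds that should keep playing for as long as the condition holds."""
    return _active(cur)


def v3_triggers(prev: Frame, cur: Frame) -> set[str]:
    """v.3: fire when a previously seen target object or face disappears."""
    events = (prev.objects & TARGET_OBJECTS) - cur.objects
    if prev.face and not cur.face:
        events.add("face")
    return events


# The cup example: the cup leaves the frame while the laptop stays.
prev = Frame(objects={"cup", "laptop"}, face=True)
cur = Frame(objects={"laptop"}, face=True)
print(v3_triggers(prev, cur))  # {'cup'}
```

Writing v.1 and v.3 as functions of two consecutive frames makes their difference explicit: v.1 reacts to things appearing together, v.3 to things going away.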

DATASETS

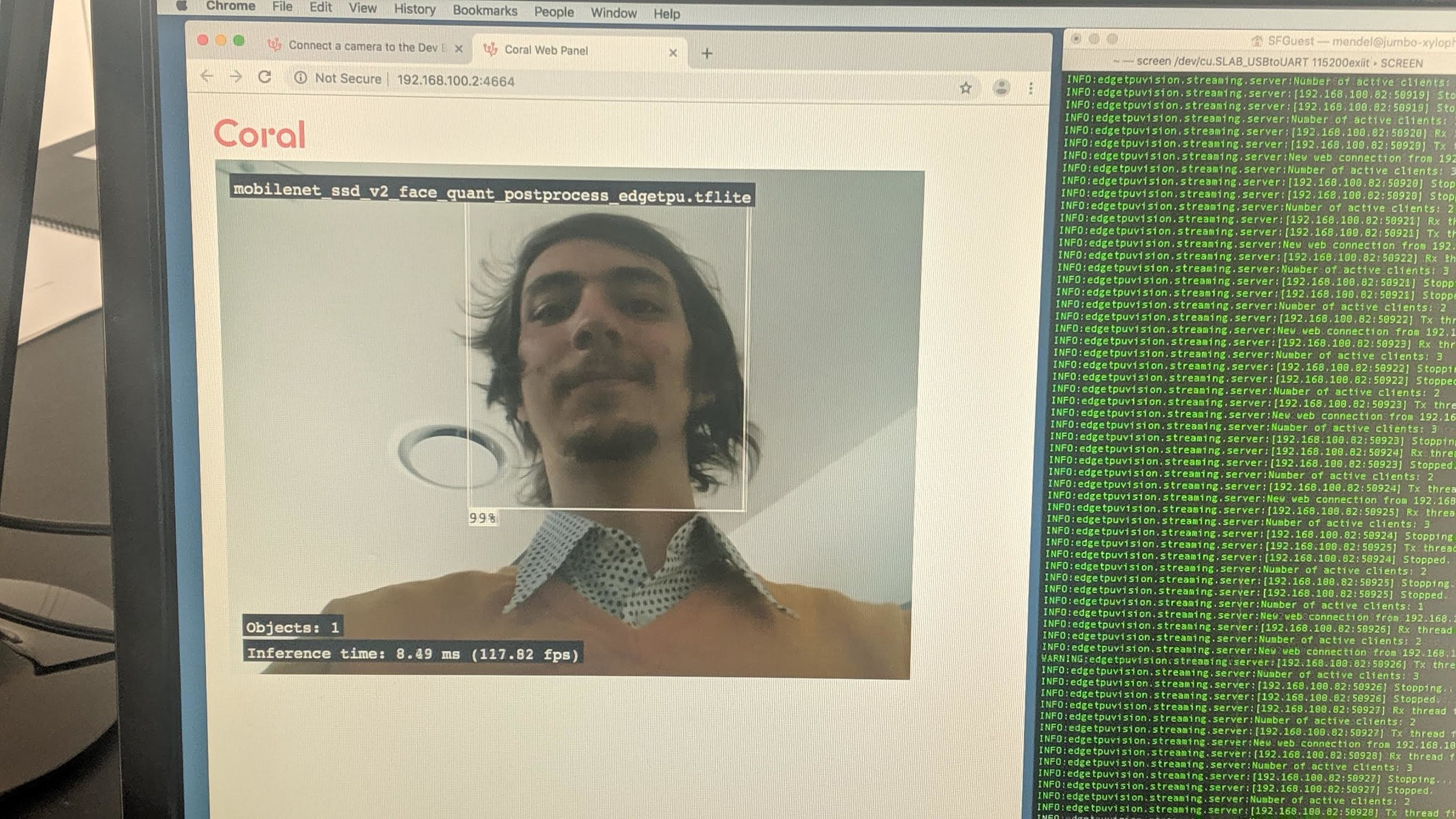

Object Classification: mobilenetv2_1

Face Detection: mobilenet_ssd_v2_face

MODELS

Object Classification: edgetpu_classify_

Face Detection: edgetpu_detect_

Output

Students sourced MP3 files to play in the browser. We discussed having artists or communities upload their own sounds to create site- and object-specific experiences that could reflect local cultures.
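One way to support site- and object-specific sounds is a two-level lookup: a per-site table of community-uploaded files that falls back to a shared default set. This is a hypothetical sketch; the site names and file paths are placeholders, and the project does not describe how its MP3 files were organized.

```python
from __future__ import annotations

# Hypothetical default sounds, one per target object (paths are placeholders).
DEFAULT_SOUNDS = {
    "cup": "sounds/default/water_flowing.mp3",
    "laptop": "sounds/default/laptop.mp3",
    "backpack": "sounds/default/backpack.mp3",
}

# Hypothetical per-site overrides uploaded by local artists or communities.
SITE_SOUNDS = {
    "site_a": {"cup": "sounds/site_a/cup.mp3"},
    "site_b": {"laptop": "sounds/site_b/laptop.mp3"},
}


def sound_for(site: str, label: str) -> str | None:
    """Return the site's own sound for a label, else the default, else None."""
    site_table = SITE_SOUNDS.get(site, {})
    return site_table.get(label, DEFAULT_SOUNDS.get(label))


print(sound_for("site_a", "cup"))    # sounds/site_a/cup.mp3
print(sound_for("site_b", "cup"))    # sounds/default/water_flowing.mp3
print(sound_for("site_a", "zebra"))  # None
```

The browser would then play whichever file path is returned. Keeping the defaults separate means a new site produces sound before anyone has uploaded files for it.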

Next Steps

We will explore centralized and distributed approaches through our research, with the goal of creating location-based music experiences; at the same time, we will research ideas around privacy and sound across networks.

Almost any use of facial recognition software immediately raises issues of privacy and surveillance. If this project were developed further, this line of exploration could be continued and strengthened.

In its first iteration, our goal with “Sound Imprints” is to place these technologies within a context that evokes joy and to present the core concepts behind artificial intelligence as approachable for general audiences.

In our second iteration, our goal is to explore federated learning by updating a specific model across devices.
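Federated learning by updating one model across devices can be illustrated with federated averaging (FedAvg), a widely used scheme; the project does not say which algorithm it would use, so treat this choice as an assumption. In this simulated sketch, a toy two-parameter linear model stands in for the on-device classifier, each hypothetical device trains only on its own data, and only the updated weights are averaged, weighted by each device's sample count. The functions `local_update`, `fed_avg`, and `federated_round` are illustrative names, not an existing library API.

```python
from __future__ import annotations

from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y) pair held on one device


def local_update(weights: Sequence[float], data: Sequence[Sample],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Train a toy linear model y = w*x + b with SGD on one device's own data."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # prediction error on this sample
            w -= lr * err * x      # gradient of 0.5 * err**2 with respect to w
            b -= lr * err          # gradient of 0.5 * err**2 with respect to b
    return [w, b]


def fed_avg(client_weights: Sequence[Sequence[float]],
            client_sizes: Sequence[int]) -> List[float]:
    """Average client weights, weighted by how many samples each client used."""
    total = sum(client_sizes)
    if total == 0:
        raise ValueError("at least one client must have data")
    avg = [0.0] * len(client_weights[0])
    for weights, size in zip(client_weights, client_sizes):
        for i, value in enumerate(weights):
            avg[i] += value * size / total
    return avg


def federated_round(global_weights: Sequence[float],
                    device_data: Sequence[Sequence[Sample]]) -> List[float]:
    """One round: every device trains locally; only weights are sent back."""
    updates = [local_update(global_weights, data) for data in device_data]
    return fed_avg(updates, [len(data) for data in device_data])


# Hypothetical data on three boards, all drawn from y = 2x + 1.
devices = [
    [(0.0, 1.0), (0.5, 2.0)],
    [(1.0, 3.0)],
    [(0.25, 1.5), (0.75, 2.5)],
]
weights = [0.0, 0.0]
for _ in range(300):
    weights = federated_round(weights, devices)
print([round(v, 3) for v in weights])  # close to [2.0, 1.0]
```

On real boards the averaging step would run on a coordinating server or peer, and the weights would be the classifier's parameters rather than two numbers. The contrast with the first iteration, where data and models lived in one cloud service, is that raw samples never leave each device here.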

We see this as a starting point for researching machine learning models and edge computing with respect to user privacy, and we hope to continue working on this project with the CalArts and Feminist.AI communities.